What are foundational models?

Published on June 26, 2025 · 5 min read

Foundational models (more commonly called foundation models), such as GPT-4 and Claude, are AI models that underlie many of today’s most advanced AI tools. They form the foundation of modern artificial intelligence by learning from large, general datasets to build a broad understanding of the world. They’re not trained for just one task and can be adapted to many different uses.

This is why you may also hear them called general-purpose AI. Foundational models like GPT-4 and Claude don’t show up directly in most user experiences. But they power the tools you might already know, like ChatGPT (which uses GPT-4) or Claude.ai (which uses Claude). Their flexibility is what makes them foundational. They can support everything from writing assistants to customer service bots and AI code reviewers, often with little or no additional training.

In this article, we’ll explore how foundational models work, what they can do, their benefits and limitations, and how platforms like Vertical let users go from consumers to AI creators by building vertical models on top of these general-purpose ones.

How do foundational models work?

To understand why foundational models are so versatile, it helps to understand how they work. What makes a single model flexible enough to write stories, translate languages, generate code, and summarize articles? The secret is in how they’re trained.

How foundational models learn and train

Foundational models use deep learning, a type of machine learning built on multi-layer neural networks loosely inspired by the brain’s structure. They train on massive datasets: often trillions of words, along with images or lines of code, from sources like books, websites, and code repositories.

Training is usually self-supervised (often loosely described as unsupervised), meaning the models discover patterns in raw data without human-written labels. During this training, they learn to predict the next word, fill gaps, or grasp connections between concepts, building a broad understanding of language, images, or code.
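To make "predicting the next word" concrete, here is a deliberately tiny sketch. It is not how real foundational models are built (they use large neural networks, not word counts), and the function names `train_bigram` and `predict_next` are made up for this illustration. It only shows the core idea that the text itself supplies the training signal, with no human labels needed.

```python
from collections import Counter, defaultdict

def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the corpus."""
    words = corpus.lower().split()
    following = defaultdict(Counter)
    # Each adjacent pair (current word, next word) is one training example.
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(model: dict[str, Counter], word: str):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Toy corpus, invented for this example.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)

print(predict_next(model, "the"))    # "cat" follows "the" most often here
print(predict_next(model, "zebra"))  # None: the toy model never saw this word
```

A real model replaces the counting table with billions of learned parameters and looks at far more than one previous word, but the training objective is the same kind of guess-what-comes-next task.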

Tasks foundational models can perform

Once trained, foundational models can perform a wide variety of tasks, including:

Text generation: Writing articles, emails, or product descriptions

Summarization: Condensing long texts into shorter versions

Translation: Converting between languages with high fluency

Image generation: Creating visuals from text prompts

Question answering: Responding to user queries with relevant answers

Code generation: Writing, debugging, and explaining code

This adaptability is what defines them as general-purpose. A single model, with slight adjustments, can switch between use cases that once required entirely separate systems.
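One common form of "slight adjustment" is simply changing the instruction sent to the model. The sketch below illustrates that pattern under stated assumptions: `fake_model` is a stand-in, not a real API, and the template names are hypothetical. In practice you would pass in whatever function calls your chosen model.

```python
# Hypothetical prompt templates: one per task, all aimed at the same model.
PROMPT_TEMPLATES = {
    "summarize": "Summarize the following text in one sentence:\n\n{text}",
    "translate": "Translate the following text into {language}:\n\n{text}",
    "explain_code": "Explain what this code does:\n\n{text}",
}

def build_prompt(task: str, text: str, **options: str) -> str:
    """Fill in the template for `task` with the user's text and any extra options."""
    return PROMPT_TEMPLATES[task].format(text=text, **options)

def run_task(model_call, task: str, text: str, **options: str) -> str:
    """Send the task-specific prompt to a model function and return its reply."""
    return model_call(build_prompt(task, text, **options))

def fake_model(prompt: str) -> str:
    """Stand-in for a real model call; it only echoes the instruction line."""
    return f"[model output for: {prompt.splitlines()[0]}]"

print(run_task(fake_model, "summarize", "Foundational models are trained on broad data."))
print(run_task(fake_model, "translate", "Good morning", language="French"))
```

The model function never changes between tasks; only the prompt does. That is the practical meaning of one general-purpose model replacing several single-purpose systems.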

When is a model no longer called foundational but vertical?

A model stops being foundational when it’s specialized. Foundational models train on large, general datasets to learn broad patterns. When one is then fine-tuned for a specific domain, such as healthcare, finance, or customer support, the result is a vertical (or specialized) model.

In other words, foundational models are the broad base. Vertical models build on them to serve focused, domain-specific needs. This shift reflects moving from general knowledge to tailored expertise.

Popular foundational models and their applications

Now let’s look at some of the most popular foundational models. These models serve as the base layer for thousands of AI applications across industries.

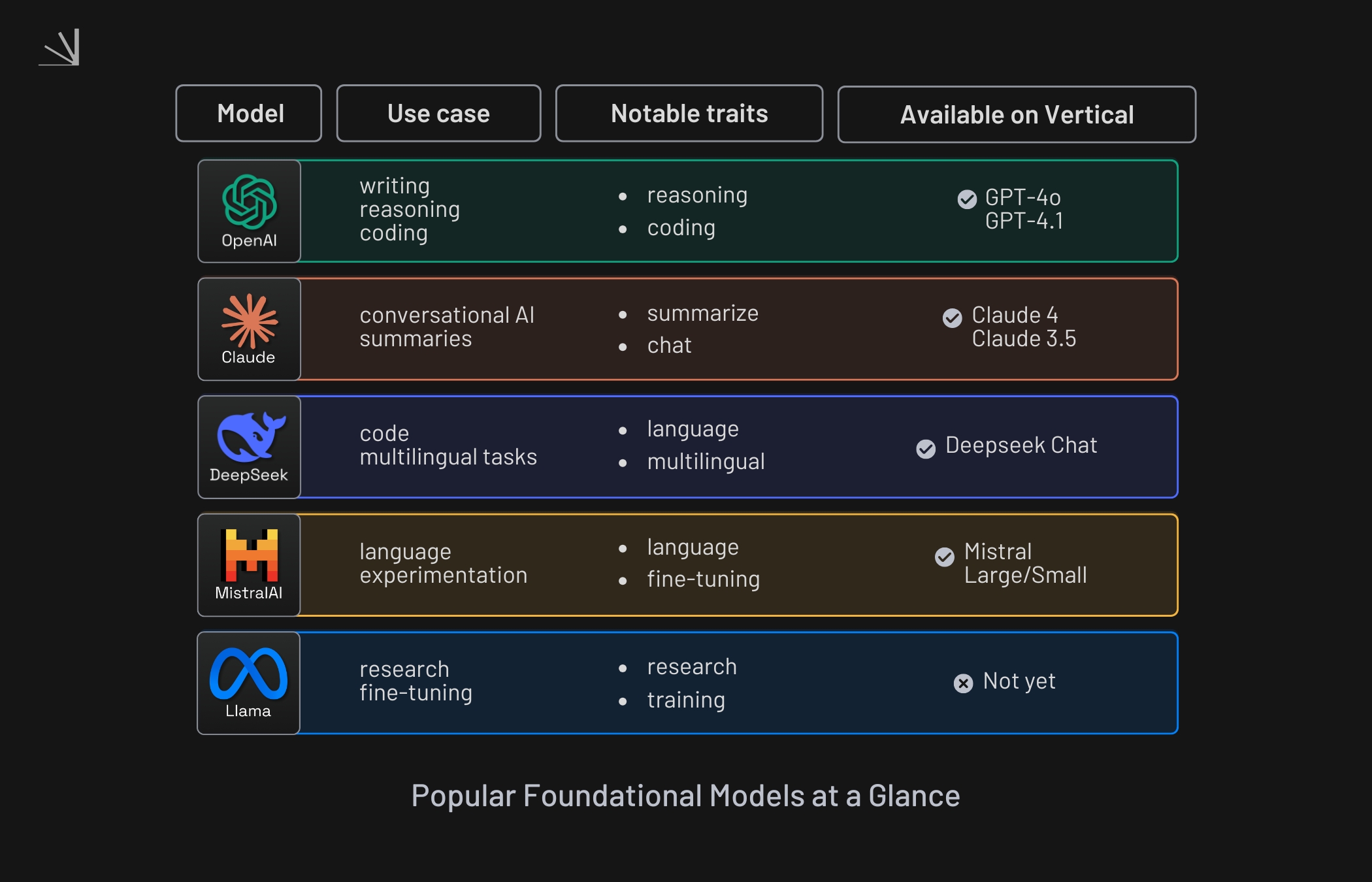

GPT-4 (OpenAI)

Used for: Language generation, reasoning, summarization, coding

Attributes: Multimodal (text and image input), closed-source, strong in reasoning and instruction-following

Available on Vertical: GPT-4o, GPT-4o mini, GPT-4.1, GPT-4.1 mini, GPT Image 1

Claude (Anthropic)

Used for: Conversational AI, summarization, safe interaction

Attributes: Focus on safety and alignment, multimodal in recent versions (text and image input), long context window

Available on Vertical: Claude 4 Opus, Claude 4 Sonnet, Claude 3.7 Sonnet, Claude 3.5 Haiku, Claude 3 Opus

DeepSeek (DeepSeek)

Used for: Language tasks, coding, knowledge-intensive tasks

Attributes: Open-source, known for strong performance in multilingual settings

Available on Vertical: Deepseek Chat, Deepseek Reasoner

Mistral (Mistral AI)

Used for: Language tasks, open-source experimentation

Attributes: Open-weight releases alongside commercial models, small and efficient options, often used in fine-tuned custom deployments

Available on Vertical: Mistral Large, Mistral Small

LLaMA (Meta)

Used for: Research, language modeling, custom fine-tuning

Attributes: Open-weight model family, widely used for academic and enterprise experimentation

Available on Vertical: Not yet

These models represent a diverse ecosystem. Some prioritize openness and community use, while others focus on performance and safety. Vertical brings many of these options into one interface, making them accessible for both everyday users and advanced builders.

The challenges and benefits of foundational models

While foundational models offer remarkable capabilities, they also come with some limitations. Understanding both sides is key to using them effectively.

Benefits of foundational models

Scalability: Once trained, a foundational model can be deployed across millions of tasks with minimal adaptation.

Versatility: A single model acts as a multi-tool for digital tasks, able to generate text, code, or images.

Fine-tuning potential: Users can build tailored models for specific domains with relatively low effort.

Transferability: Knowledge gained in one domain can often be applied to another, making them useful in dynamic contexts.

Challenges of foundational models

Computational cost: Training these models requires immense computing power and energy.

Hallucinations: Models can produce answers that sound accurate but are factually wrong or entirely made up. This often happens because the model generates plausible-sounding text even where its knowledge has gaps.

Bias and fairness: Models can inherit and even amplify biases present in training data.

Explainability: It’s often difficult to understand exactly why a model produces a particular output.

Building on top of foundational models

Foundational models excel at everyday tasks, but their answers can miss the fine-grained context of your industry or workflow. The next wave of AI innovation closes that gap with vertical models: specialized systems built on general-purpose foundations and tuned for one clear job.

Customizing foundational models

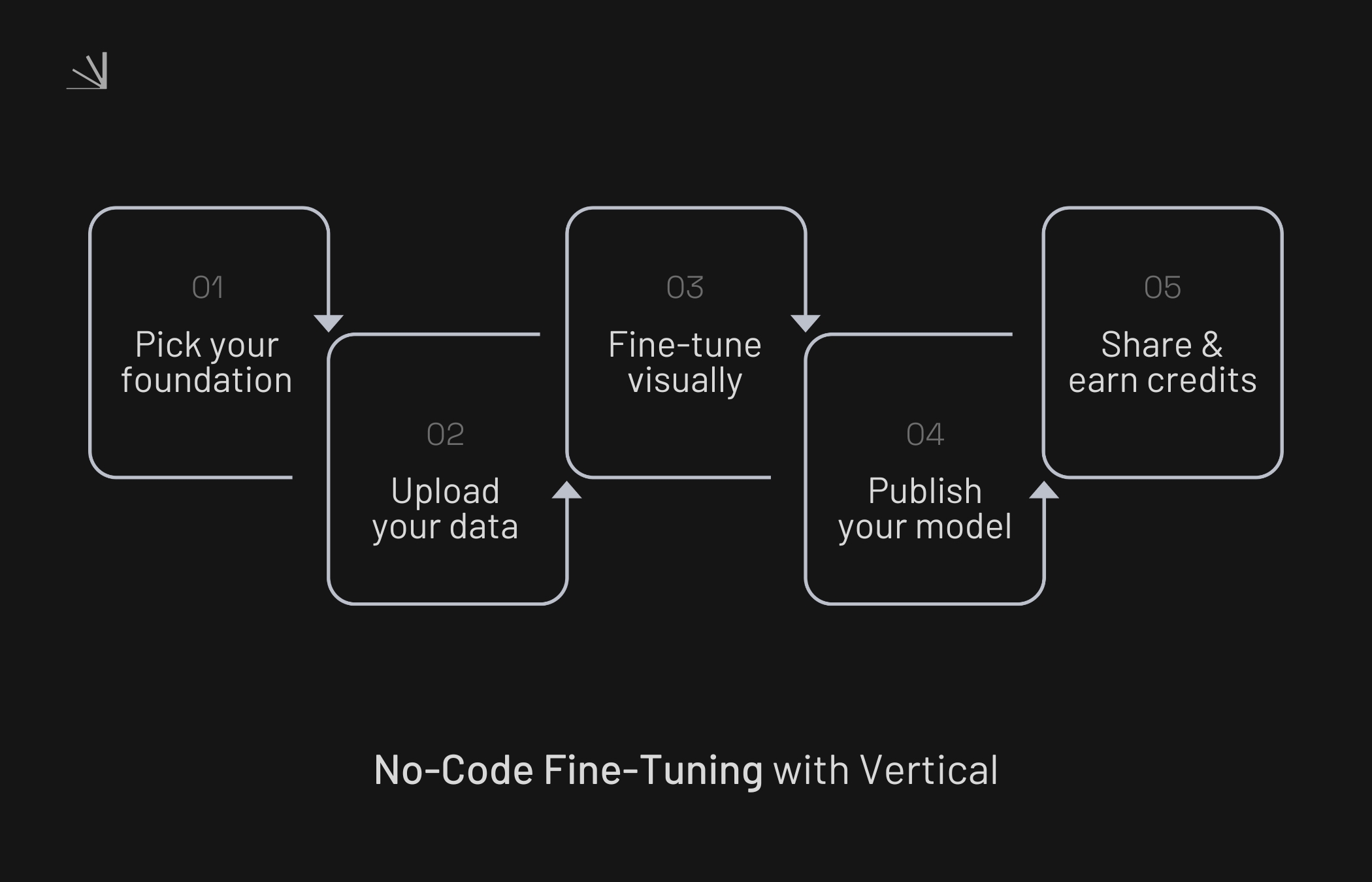

Until recently, tailoring a model required expert developers, prompt engineering, and a lot of coding expertise. Vertical removes this barrier by giving you the tools to fine-tune foundational models using your own data and expertise, with no code required. You don’t need to be a machine learning expert to create an AI that understands your world.

How Vertical enables AI creation at scale

On Vertical you can:

Use foundational models

Use models created by others

Fine‑tune a model yourself

Publish it to the marketplace

Earn credits when others use it

Foundational models are no longer just endpoints. They serve as versatile starting points that anyone can build on to create specialized, impactful AI solutions tailored to their own needs.